Think about how we've talked to computers over the years. At first, it was a very rigid, unforgiving process. We had the command line, where you had to know the exact magic words in the right order to get anything done. One typo, and you were met with an error. It was powerful, but only if you spoke the computer's language perfectly.

Then came the graphical user interface, or GUI: the world of windows, icons, and mouse pointers that we all know today. This was a revolutionary step. Suddenly, you didn't need to memorise commands. You could see your options, click on them, and drag things around. Because it was more intuitive, it made computers accessible to hundreds of millions of people. It was a visual conversation.

But both of these interfaces, the command line and the GUI, share a fundamental trait: they require us to learn the computer's way of doing things. We still have to navigate menus, find the right button, or remember a specific command. We are translating our goals into a series of steps the computer understands.

What if we didn't have to do that translation anymore? What if the computer could just understand our goal?

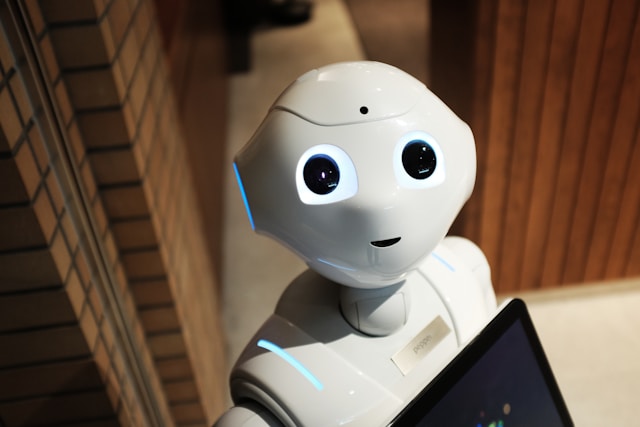

This is the next great leap in how we interact with technology, and it's powered by Artificial Intelligence. AI is poised to become the next major interface. It's not a visual one with buttons and menus, but an intelligent one built on understanding.

The new paradigm is simple: we state our intent, and the AI figures out the steps. Instead of clicking through five different menus to create a sales report, you could just say, "Show me last quarter's sales figures for the eastern region, and visualise them as a bar chart." The AI's job is to understand that request and then carry out the necessary steps: query the database, aggregate the data, select the right chart type, and present the result to you. It acts as a translator between your human language and the computer's machine language.
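To make those steps concrete, here is a minimal Python sketch of the pipeline behind the sales-report request. Everything in it is an assumption made for illustration: the `SALES` rows, the `Intent` fields, and the function names are hypothetical, and `parse_intent` is a crude keyword match standing in for the AI model that would do the real language understanding.

```python
from dataclasses import dataclass

# Hypothetical in-memory "database": (quarter, region, product, amount).
SALES = [
    ("Q1", "east", "widgets", 120),
    ("Q1", "east", "gadgets", 80),
    ("Q1", "west", "widgets", 200),
    ("Q2", "east", "widgets", 150),
]


@dataclass
class Intent:
    """Structured form of what the user asked for."""
    quarter: str
    region: str
    chart: str


def parse_intent(request, last_quarter):
    """Stand-in for the AI step: keyword matching, not real understanding."""
    text = request.lower()
    region = next((r for r in ("east", "west") if r in text), None)
    if region is None:
        raise ValueError("no known region mentioned in the request")
    chart = "bar" if "bar chart" in text else "table"
    return Intent(quarter=last_quarter, region=region, chart=chart)


def query(intent):
    """Step 1: select the rows that match the intent."""
    return [
        (product, amount)
        for quarter, region, product, amount in SALES
        if quarter == intent.quarter and region == intent.region
    ]


def aggregate(rows):
    """Step 2: total the amounts per product."""
    totals = {}
    for product, amount in rows:
        totals[product] = totals.get(product, 0) + amount
    return totals


def render(totals, chart):
    """Steps 3 and 4: pick a presentation and produce it as text."""
    items = sorted(totals.items())
    if chart == "bar":
        # One '#' per 10 units, so the bars stay short in a terminal.
        return "\n".join(f"{name:<8} {'#' * (value // 10)} {value}" for name, value in items)
    return "\n".join(f"{name}: {value}" for name, value in items)


def handle(request, last_quarter="Q1"):
    """Run the whole pipeline: intent in, presentation out."""
    intent = parse_intent(request, last_quarter)
    return render(aggregate(query(intent)), intent.chart)


if __name__ == "__main__":
    print(handle("Show me last quarter's sales figures for the eastern region, "
                 "and visualise them as a bar chart."))
```

The user only ever touches `handle`. The query, the aggregation, and the choice of chart all happen behind the request, which is the point of an intent-driven interface. In a real system the hard part is `parse_intent`, which this sketch deliberately fakes.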

We're already seeing the early stages of this. When you ask a smart assistant to play a song, or when an AI co-pilot writes code for you, you're using an intent-driven interface. You're not telling it how to do the task; you're just telling it what you want done.

This shift is more profound than it might seem. It moves the cognitive load from us to the machine. We no longer need to be experts in using a particular piece of software; we just need to be clear about what we want to achieve. This has the potential to democratise technology on a scale we've never seen before, making complex digital tools as easy to use as having a conversation.

The future of computing isn't about learning more complex systems. It's about building systems that can learn from us. The interface of tomorrow won't be something we click on, but something we talk to. It's the final step in making technology a true partner, one that doesn't just follow instructions, but understands our goals.