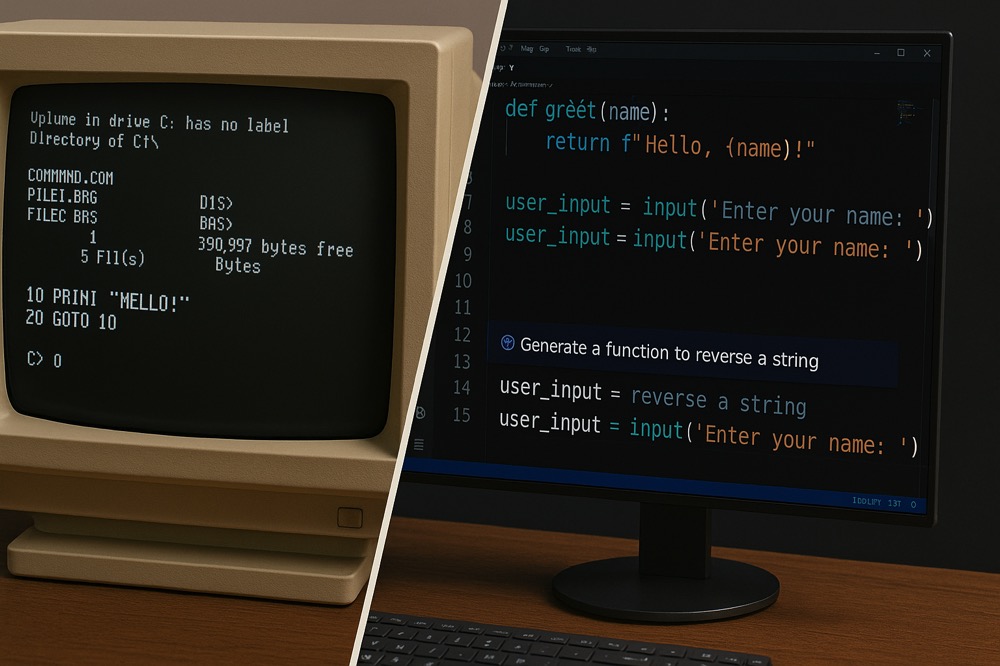

In my last post, I talked about my journey from typing format c: on an old DOS machine to collaborating with AI. Today, I want to share my absolute favourite thing to do with modern AI, something that still feels like magic: turning academic research papers directly into working code.

For years, one of the biggest challenges in technology has been bridging the gap between academia and industry. A brilliant new idea would be published in a dense, equation-heavy paper, but turning that theory into a practical, usable tool could take a team of specialists weeks or even months. You'd have to decipher the mathematics, translate it into logic, write the code, and then spend an eternity debugging it.

Now, my process looks completely different. I'll find an interesting paper, give it to an AI like Gemini, and say, "code this for me". It's a conversation, not just a command. We go back and forth, clarifying ambiguities in the paper and refining the implementation. What used to take weeks of painstaking effort can now be prototyped in an afternoon.

Let me give you a few real-world examples.

Anomaly Detection

I recently came across a paper detailing a new statistical method for detecting anomalies in time-series data. In the past, I would have spent days just trying to fully grasp the mathematical models before writing a single line of code. This time, I fed the PDF to the AI. Within minutes, it had parsed the document and produced a Python implementation of the core algorithm. It wasn't perfect on the first go, but it was a solid, working foundation that we could then test and refine together. The AI handled the heavy lifting of translation, freeing me up to focus on the higher-level task of validating and applying the model.
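To give a flavour of what that first working foundation can look like, here is a minimal sketch. It is not the method from the paper; it assumes a generic rolling z-score detector as a stand-in, and the function name, `window`, `threshold`, and the sample readings are all made up for illustration.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(series, window=5, threshold=3.0):
    """Return the indices of points that sit more than `threshold`
    standard deviations away from the mean of the preceding `window` points.

    Hypothetical stand-in technique, not the algorithm from any specific paper.
    """
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if series[i] != mu:
                anomalies.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Illustrative data: a stable signal with one obvious spike at index 7.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.3, 9.8, 25.0, 10.1, 10.0]
print(rolling_zscore_anomalies(readings))  # → [7]
```

A draft like this is where the validation work starts: note that the spike inflates the window's standard deviation for the next few points, which is exactly the sort of behaviour worth checking against what the paper intends.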

Customer Journey Mapping

Another fascinating area is using data to understand customer behaviour. There are academic papers that model how users interact with a website or product, mapping out their journey from discovery to purchase. Implementing these models used to be a significant undertaking. Now, I can give the AI a paper on a new journey mapping technique, and it can generate the code to analyse server logs or user event data and produce the kind of insights the paper describes. It dramatically lowers the barrier to experimenting with new ways of understanding our customers.
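As a rough illustration of the kind of code involved, the sketch below turns raw event data into page-to-page transition probabilities. This is one common and simple way to model journeys (a first-order Markov view), chosen here as an assumption rather than taken from any particular paper. The event format `(user_id, timestamp, page)` and the sample events are hypothetical.

```python
from collections import Counter, defaultdict


def transition_probabilities(events):
    """Estimate how often users move from one page to the next.

    `events` is an iterable of (user_id, timestamp, page) tuples.
    This format is an assumption made for the example.
    """
    # Rebuild each user's journey in time order.
    journeys = defaultdict(list)
    for user_id, _timestamp, page in sorted(events, key=lambda e: (e[0], e[1])):
        journeys[user_id].append(page)

    # Count consecutive page pairs across all journeys.
    counts = defaultdict(Counter)
    for pages in journeys.values():
        for src, dst in zip(pages, pages[1:]):
            counts[src][dst] += 1

    # Normalise the counts for each source page into probabilities.
    return {
        src: {dst: n / sum(dsts.values()) for dst, n in dsts.items()}
        for src, dsts in counts.items()
    }


# Made-up event data for three users.
events = [
    ("u1", 1, "home"), ("u1", 2, "product"), ("u1", 3, "checkout"),
    ("u2", 1, "home"), ("u2", 2, "product"), ("u2", 3, "home"),
    ("u3", 1, "home"), ("u3", 2, "search"), ("u3", 3, "product"),
    ("u3", 4, "checkout"),
]
probs = transition_probabilities(events)
print(probs["home"])
```

In this toy data, two of the three journeys that start at `home` go straight to `product`, which is the sort of pattern a journey model is meant to surface.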

Building Small Language Models

This is where it gets really interesting. We can use large language models (LLMs) to help build smaller, more specialised ones. I've been experimenting with research papers that propose new, efficient LLM architectures. I can give one of these papers to a large AI and have it help me write the code for this new, smaller architecture. There's a beautiful irony in using a massive AI to help create its smaller, more nimble cousins. It accelerates the cycle of innovation in the AI field itself.
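One small thing I find useful when sizing a "smaller cousin" is a back-of-the-envelope parameter count. The sketch below assumes a plain decoder-only transformer with a 4x feed-forward expansion and tied input and output embeddings, and it ignores biases and layer norms. A paper proposing a new architecture will differ from this baseline, so treat it as a reference point only; the function name and the example sizes are invented for illustration.

```python
def approx_transformer_params(d_model, n_layers, vocab_size):
    """Rough parameter count for a standard decoder-only transformer.

    Assumptions (not taken from any specific paper):
      - attention uses four d_model x d_model projections (Q, K, V, output)
      - the feed-forward block expands to 4 * d_model and back
      - token embeddings are shared with the output layer
      - biases, layer norms and positional parameters are ignored
    """
    attention = 4 * d_model * d_model
    feed_forward = 2 * d_model * (4 * d_model)
    per_layer = attention + feed_forward  # 12 * d_model^2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


# Hypothetical small configuration.
total = approx_transformer_params(d_model=256, n_layers=4, vocab_size=8000)
print(f"{total:,} parameters")  # → 5,193,728 parameters
```

A quick estimate like this makes it easier to compare a proposed architecture against a conventional one before asking the AI to write the full implementation.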

This new capability is more than just a productivity hack. It's fundamentally changing the speed at which ideas can be tested and deployed. The friction between a theoretical concept and a working prototype has dropped sharply: the first draft still needs testing and refinement, but it arrives in minutes rather than weeks. It allows people like me to explore more ideas, take more risks, and bring the cutting edge of academic research into the real world faster than ever before.