The word "bot" often carries a negative connotation, conjuring images of malicious scripts trying to hack accounts or crash websites. While it's true that a large share of internet traffic comes from bad bots, not all automated traffic is harmful. In fact, some bots are essential for the internet to function as we know it.

Effective bot management isn't about blocking all automated traffic. It's about accurate classification: distinguishing between the good, the bad, and the "grey" bots that fall somewhere in between. Understanding this spectrum is the first step toward building a smart, nuanced security strategy that protects your site without blocking beneficial traffic.

Good Bots: The Essential Workers of the Web

Good bots are automated programs that perform useful and necessary tasks. They are generally transparent about who they are, identifying themselves in the User-Agent header, and they respect the rules you set in your robots.txt file. Blocking them can have serious negative consequences for your business.
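Respecting robots.txt is voluntary: the file tells well-behaved crawlers what to skip but enforces nothing, which is why compliance is a useful marker of a good bot. The following is a minimal sketch of the check a compliant crawler performs, using Python's standard `urllib.robotparser`. The robots.txt rules and the `ExampleBot` name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt published by a site owner.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: ExampleBot
Crawl-delay: 5
Disallow: /search
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a compliant crawler would after downloading it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """A well-behaved bot runs this check before each request and skips disallowed URLs."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    print(may_fetch(rp, "ExampleBot/1.0", "https://example.com/products"))    # True
    print(may_fetch(rp, "ExampleBot/1.0", "https://example.com/search?q=x"))  # False
    print(may_fetch(rp, "OtherBot/2.0", "https://example.com/admin/users"))   # False
    print(rp.crawl_delay("ExampleBot/1.0"))                                   # 5
```

Because `ExampleBot` has its own group, the `*` group's `Disallow: /admin/` does not apply to it; a crawler follows the group that names it rather than the catch-all group.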

Examples of Good Bots:

- Search Engine Crawlers: Bots like Googlebot and Bingbot are the most well-known good bots. They crawl and index your website's content, which is how your pages appear in search engine results. Blocking Googlebot, for example, would stop Google from crawling your pages, and they would gradually disappear from its search results.

- Performance Monitoring Bots: Uptime and performance monitoring services use these bots to check your website's availability and response times from locations around the world, alerting you if your site goes down.

- Copyright Bots: These bots scan the web for plagiarized content, helping to protect your intellectual property.

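Management Strategy: Good bots should be identified and allowed to access your site freely. Because any client can claim to be Googlebot in its User-Agent header, that claim should be verified: a reverse DNS lookup on the requesting IP should return a hostname under a domain the crawler's operator documents (for Googlebot, googlebot.com or google.com), and a forward DNS lookup on that hostname should resolve back to the same IP.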
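A reverse DNS lookup on its own is not proof, because whoever controls an IP range also controls its PTR records. The usual fix is forward-confirmed reverse DNS: look up the hostname for the IP, check its domain, then resolve that hostname and confirm it maps back to the same IP. The sketch below assumes Googlebot's documented domains (verify them against Google's current documentation), and the function names are hypothetical. The DNS lookups are injectable so the logic can be exercised without network access.

```python
import ipaddress
import socket
from typing import Callable, Iterable, List

# Hostname suffixes Google documents for Googlebot; confirm against Google's current docs.
GOOGLEBOT_DOMAINS = ("googlebot.com", "google.com")


def _reverse_dns(ip: str) -> str:
    """PTR lookup: IP -> hostname (raises OSError, e.g. socket.herror, on failure)."""
    return socket.gethostbyaddr(ip)[0]


def _forward_dns(hostname: str) -> List[str]:
    """A/AAAA lookup: hostname -> IP strings (raises OSError, e.g. socket.gaierror)."""
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def is_verified_crawler(
    ip: str,
    allowed_domains: Iterable[str] = GOOGLEBOT_DOMAINS,
    reverse_dns: Callable[[str], str] = _reverse_dns,
    forward_dns: Callable[[str], List[str]] = _forward_dns,
) -> bool:
    """Forward-confirmed reverse DNS check for a client claiming to be a known crawler."""
    try:
        client = ipaddress.ip_address(ip)
    except ValueError:
        return False  # not a valid IP address at all

    # Step 1: reverse lookup (IP -> hostname).
    try:
        hostname = reverse_dns(ip).rstrip(".").lower()
    except OSError:
        return False

    # Step 2: the hostname must sit under one of the operator's domains.
    if not any(hostname == d or hostname.endswith("." + d) for d in allowed_domains):
        return False

    # Step 3: forward lookup (hostname -> IPs) must include the original IP.
    try:
        resolved = {ipaddress.ip_address(a) for a in forward_dns(hostname)}
    except (OSError, ValueError):
        return False
    return client in resolved
```

DNS lookups are slow, so a real deployment would cache the result per IP. Google also publishes its crawler IP ranges, which can be checked instead of, or alongside, DNS.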

Bad Bots: The Malicious Actors

Bad bots are designed with malicious intent. They are the reason bot management is a critical security function. These bots are deceptive, often trying to hide their identity and purpose, and they are responsible for a wide range of costly and damaging activities.

Examples of Bad Bots:

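- Credential Stuffers: These bots try large lists of stolen username and password pairs, typically leaked in other breaches, against your login pages to take over accounts whose owners reused their passwords.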

- Content and Price Scrapers: These bots steal your valuable content, product listings, and pricing data, often to be used by competitors.

- Spam Bots: These bots flood comment sections, forums, and contact forms with unwanted ads or malicious links.

- Distributed Denial of Service (DDoS) Bots: These bots are part of a botnet used to overwhelm a website with traffic, causing it to slow down or crash entirely.

- Inventory Hoarding Bots: Common in e-commerce, these bots automatically add limited-edition products to shopping carts to prevent legitimate customers from buying them, often for resale at a higher price (scalping).

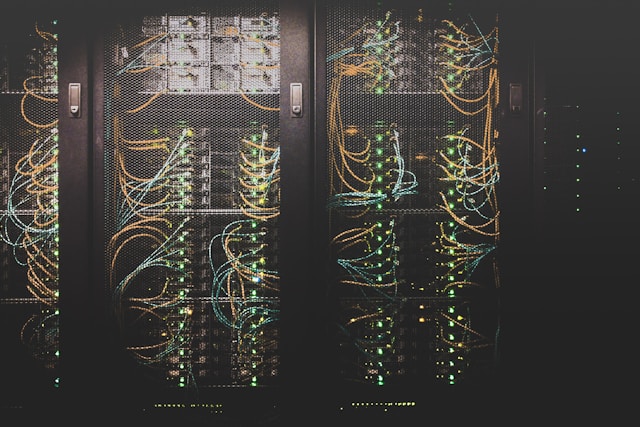

Management Strategy: Bad bots must be accurately identified and blocked as quickly as possible, ideally at the network edge before they can consume your server resources.
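As a rough illustration of "identify, then block early", the sketch below rejects requests from known-bad networks before any application code runs, and automatically blocks an IP after too many failed logins in a sliding window, a crude credential-stuffing signal. The class name, thresholds, and addresses (taken from the RFC 5737 documentation ranges) are hypothetical. Per-IP thresholds are easy for distributed botnets to evade by rotating addresses, which is why production bot management combines many more signals.

```python
import ipaddress
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Iterable


class EdgeGuard:
    """Hypothetical edge filter: static CIDR blocklist plus failed-login auto-blocking."""

    def __init__(
        self,
        blocked_cidrs: Iterable[str],
        max_failures: int = 10,
        window_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.blocked_networks = [ipaddress.ip_network(c) for c in blocked_cidrs]
        self.blocked_ips = set()
        self.max_failures = max_failures
        self.window = window_seconds
        self.clock = clock
        self.failures: Dict[str, Deque[float]] = defaultdict(deque)

    def is_blocked(self, client_ip: str) -> bool:
        """Cheap check meant to run before the request reaches the application."""
        addr = ipaddress.ip_address(client_ip)
        return addr in self.blocked_ips or any(addr in net for net in self.blocked_networks)

    def record_failed_login(self, client_ip: str) -> None:
        """Count failed logins in a sliding window; block the IP once it hits the limit."""
        now = self.clock()
        attempts = self.failures[client_ip]
        attempts.append(now)
        # Drop attempts that have fallen out of the window.
        while attempts and now - attempts[0] > self.window:
            attempts.popleft()
        if len(attempts) >= self.max_failures:
            self.blocked_ips.add(ipaddress.ip_address(client_ip))

    def handle(self, client_ip: str) -> int:
        """Return 403 to reject at the edge, or 200 to pass the request through."""
        return 403 if self.is_blocked(client_ip) else 200
```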

Grey Bots: The Nuanced Category

Grey bots are not inherently malicious, but their behaviour can cause problems. They usually serve a legitimate purpose, yet if they crawl your site too aggressively they consume excessive bandwidth and server resources, which can slow down your site for real users.

Examples of Grey Bots:

- Aggressive SEO Tools: Bots from marketing tools like Ahrefs, SEMrush, and Majestic crawl websites to gather data for backlink analysis and competitive research. While useful, their crawling can sometimes be very aggressive.

- Partner and Aggregator Bots: These could be bots from partner companies or price comparison websites that need to access your data. Their activity is legitimate, but it needs to be managed.

- Feed Fetchers: Bots that regularly pull content, such as RSS or Atom feeds, for news aggregators or other applications fall into this category.

Management Strategy: Grey bots require a more nuanced approach than a simple allow or block rule. The best strategy is often to rate-limit or tarpit them.

- Rate-Limiting: This allows the bot to continue accessing your site but slows it down to a manageable level, preventing it from overwhelming your servers.

- Tarpitting: This technique intentionally slows down the connection for a specific bot, increasing the time and cost of crawling your site and discouraging overly aggressive behaviour.
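Both strategies can be built on a token bucket, one common rate-limiting algorithm (fixed and sliding windows are alternatives). In the sketch below, rate limiting refuses a request with HTTP 429 once the bucket is empty. Tarpitting is modelled as one simple application-level interpretation: the request is still served, but only after a stall that grows as the bot keeps pushing. Network-level tarpits, which hold TCP connections open, work differently. All names and parameters are hypothetical.

```python
import time
from typing import Callable, Tuple


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self) -> bool:
        """Rate limiting: spend a token if one is available, otherwise refuse."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def reserve(self) -> float:
        """Tarpitting: always spend a token, going into debt if needed, and return
        how many seconds to stall. The harder a client pushes, the longer the stall."""
        self._refill()
        self.tokens -= 1
        return 0.0 if self.tokens >= 0 else -self.tokens / self.rate


def handle_grey_bot(bucket: TokenBucket, mode: str,
                    max_stall: float = 30.0) -> Tuple[int, float]:
    """Return (HTTP status, seconds to stall before responding) for one request."""
    if mode == "rate_limit":
        # 429 Too Many Requests; a real server would also send a Retry-After header.
        return (200, 0.0) if bucket.try_acquire() else (429, 0.0)
    if mode == "tarpit":
        # Serve the request after a delay, capped so we do not hold our own
        # connections open indefinitely.
        return 200, min(bucket.reserve(), max_stall)
    raise ValueError(f"unknown mode: {mode!r}")
```

A server would keep one bucket per bot identity (for example per user-agent or per IP) and, in tarpit mode, wait for the returned delay (for instance with `asyncio.sleep`) before sending the response.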

By classifying incoming bot traffic and applying the appropriate strategy for each category, organizations can build a robust defense that neutralizes threats, manages resource consumption, and welcomes the beneficial automation that makes the modern web work.
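The classify-then-act approach can be sketched as a single decision function that maps each request to allow, block, or rate-limit. This is a hypothetical illustration: the token lists are deliberately tiny (real deployments rely on maintained bot databases), and User-Agent strings are trivial to fake. That is why the good-bot branch depends on DNS verification, and why traffic that does not identify itself as a bot still needs behavioural detection.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    RATE_LIMIT = "rate_limit"


# Hypothetical, deliberately tiny lists of lowercase User-Agent tokens.
GOOD_BOT_TOKENS = ("googlebot", "bingbot")
GREY_BOT_TOKENS = ("ahrefsbot", "semrushbot", "mj12bot")  # mj12bot is Majestic's crawler


def classify(user_agent: str, dns_verified: bool, on_blocklist: bool) -> Action:
    """Map one request to an action using the good/bad/grey categories.

    dns_verified: result of a forward-confirmed reverse DNS check on the client IP
                  (each crawler operator documents its own domains).
    on_blocklist: whether the IP has already been identified as a bad bot.
    """
    ua = user_agent.lower()
    if on_blocklist:
        return Action.BLOCK
    if any(token in ua for token in GOOD_BOT_TOKENS):
        # Claiming to be a search crawler but failing verification means
        # impersonation, which is bad-bot behaviour.
        return Action.ALLOW if dns_verified else Action.BLOCK
    if any(token in ua for token in GREY_BOT_TOKENS):
        return Action.RATE_LIMIT
    # Not a self-identified bot: pass it on to whatever other checks you run.
    return Action.ALLOW
```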