Introducing Advanced Rate Limiting

Rate limiting prevents servers from being overwhelmed by too many requests in a short period of time. Typically, rate limiting is configured using rules that consist of a filter (for example, a path like /login) and a limit on the number of requests a user can make in a given time (for example, 10 requests in a minute). If a user exceeds this limit, they are usually blocked for a certain timeout period.
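To make the idea concrete, here is a minimal sketch of such a rule in Python. It is only an illustration, not Peakhour's implementation: the `Rule` class, its field names, and the 300 second timeout are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    """Hypothetical fixed-window rate limit rule: filter + limit + timeout."""
    path_prefix: str      # filter: which requests the rule applies to
    limit: int            # maximum requests allowed per window
    window_s: float       # window length in seconds
    timeout_s: float      # how long an offender stays blocked (assumed value)
    _windows: dict = field(default_factory=dict)        # user -> (window_start, count)
    _blocked_until: dict = field(default_factory=dict)  # user -> unblock time

    def allow(self, user: str, path: str, now: float) -> bool:
        if not path.startswith(self.path_prefix):
            return True                        # filter did not match
        if now < self._blocked_until.get(user, 0.0):
            return False                       # still serving a timeout
        start, count = self._windows.get(user, (now, 0))
        if now - start >= self.window_s:
            start, count = now, 0              # start a fresh window
        count += 1
        self._windows[user] = (start, count)
        if count > self.limit:
            self._blocked_until[user] = now + self.timeout_s
            return False
        return True


# 10 requests per minute on /login, with an assumed 5 minute timeout.
rule = Rule(path_prefix="/login", limit=10, window_s=60, timeout_s=300)
results = [rule.allow("user-a", "/login", now=float(t)) for t in range(12)]
```

In this run the first 10 requests are allowed and the 11th and 12th are refused. Note that `user` is just a string here; deciding what that string should be is the hard part.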

But how do you identify a 'user'? Traditionally, rate limiting has used the IP address for grouping requests, assuming that requests from the same IP address come from the same 'user'. Unfortunately, an IP address is no longer a reliable way of identifying a 'user'. IP addresses are rarely static and are often shared. For example, an office network might have hundreds of individual computers on it but present a single IP address to the internet for all of them. Mobile operators commonly use carrier-grade network address translation (CGNAT) to share the same IP address across thousands of devices or users. Bot networks, seeking to avoid security constraints like rate limiting, will rotate their requests through thousands of different IP addresses. This makes rate limiting based on IP addresses a poor choice from both a functional and a security perspective.

Introducing Advanced Rate Limiting

Peakhour is very pleased to announce our Advanced Rate Limiting service. With Advanced Rate Limiting you can create filters using any HTTP request characteristic, for example the URI, request method, headers, cookies, country, network fingerprints and more. You can also use the response headers and response code.

For counting requests you can use the following fields for grouping:

IP Address

ASN

Country Code

HTTP/2 Fingerprint

TLS Fingerprint

Any combination of Request Headers

You can use one of those fields, or a combination of them, to get unprecedented control over how users are identified.

You can also separate the filter expression from the mitigation expression. For example, a client that makes excessive attempts on /login can be blocked across the entire site, not just on /login.
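As a rough illustration of both ideas, the Python sketch below builds a counter key from a combination of fields and keeps the filter (what gets counted) separate from the mitigation (what gets blocked). The `SiteWideBlocker` class, the field names, and the limit of 3 are hypothetical, and the time window is left out to keep the example short.

```python
from collections import defaultdict


def counter_key(request: dict, fields: tuple) -> tuple:
    """Combine the chosen grouping fields into a single counter key."""
    return tuple(request.get(name, "") for name in fields)


class SiteWideBlocker:
    """Hypothetical rule: count /login attempts, block on every path."""

    def __init__(self, fields: tuple, limit: int):
        self.fields = fields
        self.limit = limit
        self.counts = defaultdict(int)
        self.blocked = set()

    def handle(self, request: dict) -> bool:
        key = counter_key(request, self.fields)
        if key in self.blocked:            # mitigation: applies to the whole site
            return False
        if request["path"] == "/login":    # filter: only login attempts are counted
            self.counts[key] += 1
            if self.counts[key] > self.limit:
                self.blocked.add(key)
                return False
        return True


# Group by ASN plus TLS fingerprint (example values only).
blocker = SiteWideBlocker(fields=("asn", "tls_fingerprint"), limit=3)
client = {"asn": "AS64500", "tls_fingerprint": "fp-1", "path": "/login"}
login_results = [blocker.handle(client) for _ in range(4)]
home_result = blocker.handle({**client, "path": "/"})
other_result = blocker.handle({"asn": "AS64500", "tls_fingerprint": "fp-2", "path": "/"})
```

Once the fourth login attempt trips the limit, the same client is also refused on `/`, while a client on the same ASN with a different fingerprint is unaffected.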

Putting it into action

We are really excited about Advanced Rate Limiting and its power to protect applications from attacks like Layer 7 DDoS, Account Takeovers, Credential Stuffing, and more! Here are some real world examples you can configure using our dashboard and API.

Protect against general site abuse

Our example website is a medium sized ecommerce store that has page URLs ending in /. It serves Australian clients and typically sees around 100 page requests a minute from non search engine traffic during peak traffic times. Armed with this information we can set up some rate limiting to prevent general site abuse and protect against layer 7 DDoS attacks.

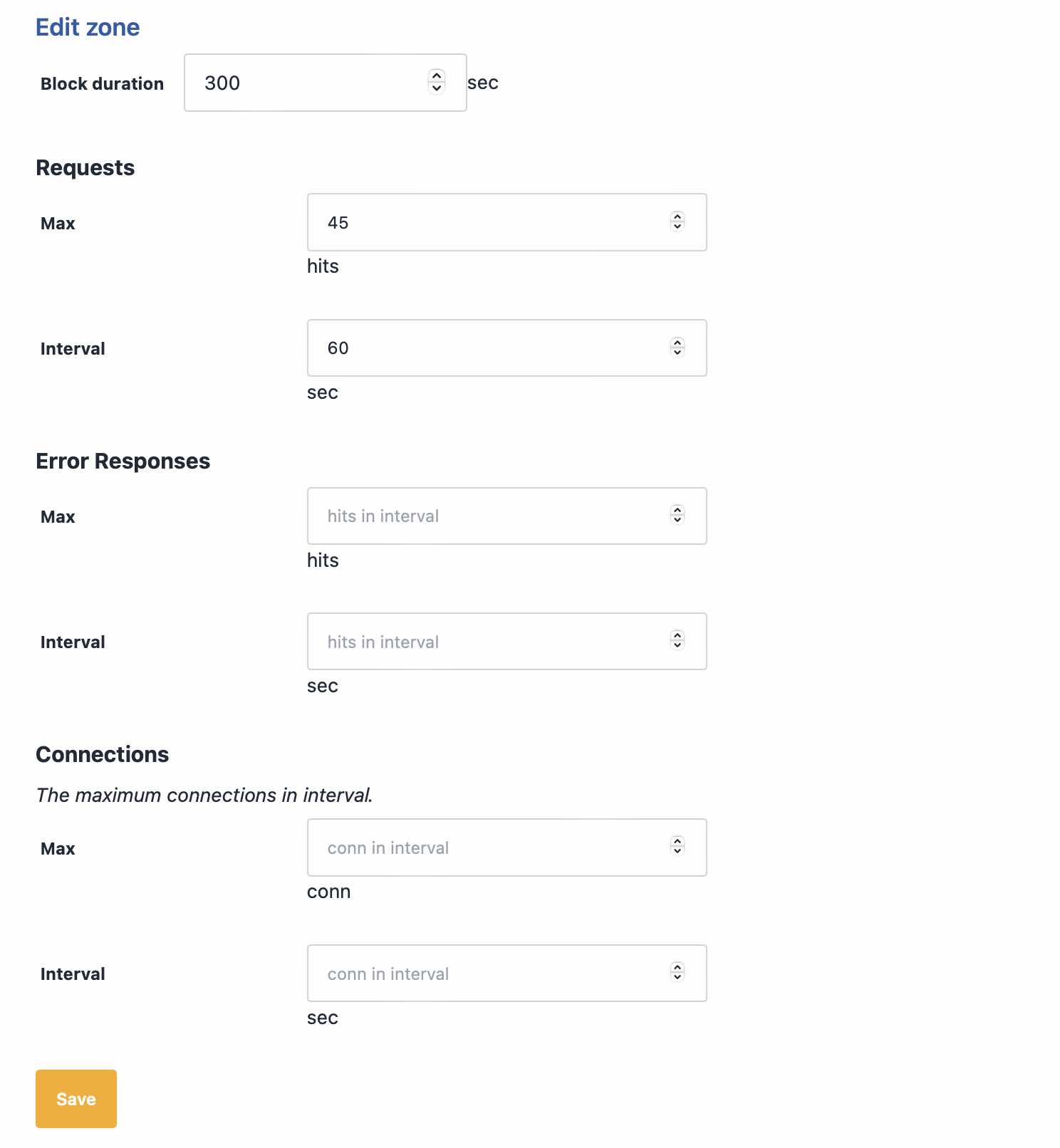

Peakhour rate limiting works by first setting up zones. You specify your request limits in these zones.

Here we've specified a maximum of 45 requests in 1 minute. We're going to apply this limit to page loads only. Since our typical maximum for ALL users on this website is 100 page requests in a minute, it seems reasonable that a single real user is not going to view anywhere near that many pages in 1 minute. We could also specify a limit on error responses in a minute. An error could be a 404, which a scraper might typically get when looking for removed URLs.
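Conceptually, a zone like this behaves like a sliding window of recent requests per client. The Python sketch below is one way to model it, under stated assumptions: the `Zone` class is hypothetical, the error limit of 10 is made up for the example, and any status of 400 or above is treated as an error.

```python
from collections import defaultdict, deque
from typing import Optional


class Zone:
    """Hypothetical zone: sliding window with a request limit and optional error limit."""

    def __init__(self, window_s: float, request_limit: int,
                 error_limit: Optional[int] = None):
        self.window_s = window_s
        self.request_limit = request_limit
        self.error_limit = error_limit
        self.events = defaultdict(deque)   # key -> deque of (timestamp, is_error)

    def over_limit(self, key: str, status: int, now: float) -> bool:
        q = self.events[key]
        while q and now - q[0][0] >= self.window_s:
            q.popleft()                    # drop events that left the window
        q.append((now, status >= 400))     # assumption: 4xx/5xx count as errors
        if len(q) > self.request_limit:
            return True
        errors = sum(1 for _, is_error in q if is_error)
        return self.error_limit is not None and errors > self.error_limit


# 45 requests per minute, plus an assumed limit of 10 error responses per minute.
zone = Zone(window_s=60, request_limit=45, error_limit=10)
page_flags = [zone.over_limit("client-a", 200, now=float(t)) for t in range(46)]
scraper_flags = [zone.over_limit("client-b", 404, now=float(t)) for t in range(11)]
```

Here `client-a` trips the request limit on its 46th page load, while `client-b` trips the error limit on its 11th 404 without getting anywhere near 45 requests.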

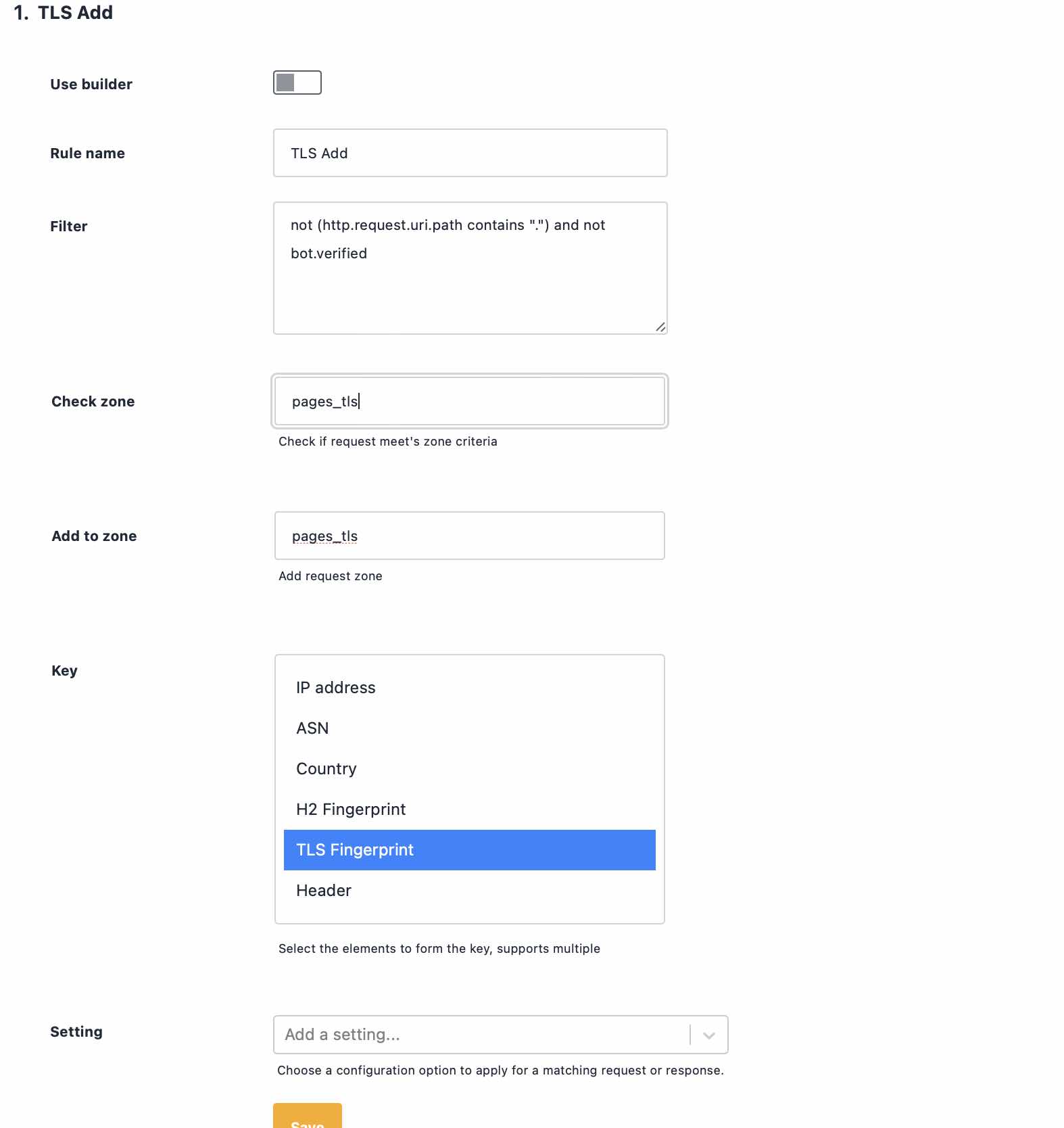

Now let's define our filter and our counter. For our filter, we mentioned that page URLs end in /, so we'll use that, but exclude verified bots to make sure they're not restricted when crawling the site. A verified bot is a crawler, like those run by Google or Bing, that Peakhour has verified as legitimate by using reverse DNS to confirm they are who they say they are.
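A common way to do this kind of verification is forward-confirmed reverse DNS: look up the hostname for the IP address, check that it belongs to the crawler's domain, then resolve that hostname and confirm it points back to the same IP. The Python sketch below shows the general technique, not Peakhour's actual implementation. The function names and the suffix list are assumptions, and the default forward lookup only handles IPv4.

```python
import socket

# Assumed list of crawler hostname suffixes, for illustration only.
TRUSTED_SUFFIXES = (".googlebot.com", ".google.com", ".search.msn.com")


def is_verified_bot(ip: str, reverse=None, forward=None) -> bool:
    """Forward-confirmed reverse DNS check. Resolvers are injectable for testing."""
    reverse = reverse or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward = forward or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        host = reverse(ip).lower().rstrip(".")
        if not host.endswith(TRUSTED_SUFFIXES):
            return False
        return ip in forward(host)     # hostname must resolve back to the same IP
    except OSError:                    # lookup failed: treat as unverified
        return False


def matches_filter(path: str, ip: str, **resolvers) -> bool:
    """Filter from the example: page URLs ending in '/', excluding verified bots."""
    return path.endswith("/") and not is_verified_bot(ip, **resolvers)


# Fake DNS data so the example runs without network access.
fake_ptr = {
    "192.0.2.10": "crawl-1.googlebot.com",
    "192.0.2.20": "crawl-1.googlebot.com",   # spoofed PTR record
    "192.0.2.30": "host.example.net",
}
fake_a = {"crawl-1.googlebot.com": ["192.0.2.10"]}
resolvers = {
    "reverse": lambda ip: fake_ptr[ip],
    "forward": lambda host: fake_a.get(host, []),
}

genuine = is_verified_bot("192.0.2.10", **resolvers)
spoofed = is_verified_bot("192.0.2.20", **resolvers)
counted = matches_filter("/products/", "192.0.2.30", **resolvers)
```

The forward lookup is what stops an attacker who controls the reverse DNS for their own IP range from simply claiming to be a search engine.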

Attackers, scrapers and others looking to abuse a site will typically launch an attack using a particular piece of software. That piece of software will have a TLS fingerprint (like JA3) that remains the same even as the attacker rotates their user-agent, IP address, and other characteristics, so we'll use the TLS fingerprint as our request counter.
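To see why the fingerprint is so stable, it helps to look at how JA3 is built. JA3 is an MD5 hash over five values from the TLS ClientHello: the TLS version, cipher suites, extensions, elliptic curves, and elliptic curve point formats, with GREASE values ignored. None of those depend on the client's IP address or User-Agent header. The Python sketch below follows the public JA3 description; it is not Peakhour's fingerprinting code, and the ClientHello values are made up.

```python
import hashlib


def is_grease(value: int) -> bool:
    """GREASE values look like 0x0A0A, 0x1A1A, ... 0xFAFA and are skipped by JA3."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def ja3(version, ciphers, extensions, curves, point_formats) -> str:
    """Build the JA3 string (fields joined by ',', values by '-') and MD5 it."""
    def join(values):
        return "-".join(str(v) for v in values if not is_grease(v))

    raw = ",".join([
        str(version), join(ciphers), join(extensions),
        join(curves), join(point_formats),
    ])
    return hashlib.md5(raw.encode("ascii")).hexdigest()


# Illustrative ClientHello values, not taken from a real client.
hello = dict(
    version=771,
    ciphers=[0x1A1A, 4865, 4866],   # includes one GREASE value
    extensions=[0, 10, 11],
    curves=[29, 23],
    point_formats=[0],
)

fp_first = ja3(**hello)
fp_again = ja3(**hello)   # same software from a new IP or User-Agent: same inputs
fp_other = ja3(**{**hello, "ciphers": [4866, 4865]})   # different TLS stack
```

Because the hash only changes when the TLS stack itself changes, rotating proxies or spoofing a browser User-Agent leaves `fp_first` untouched, which is what makes it useful as a counter key.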

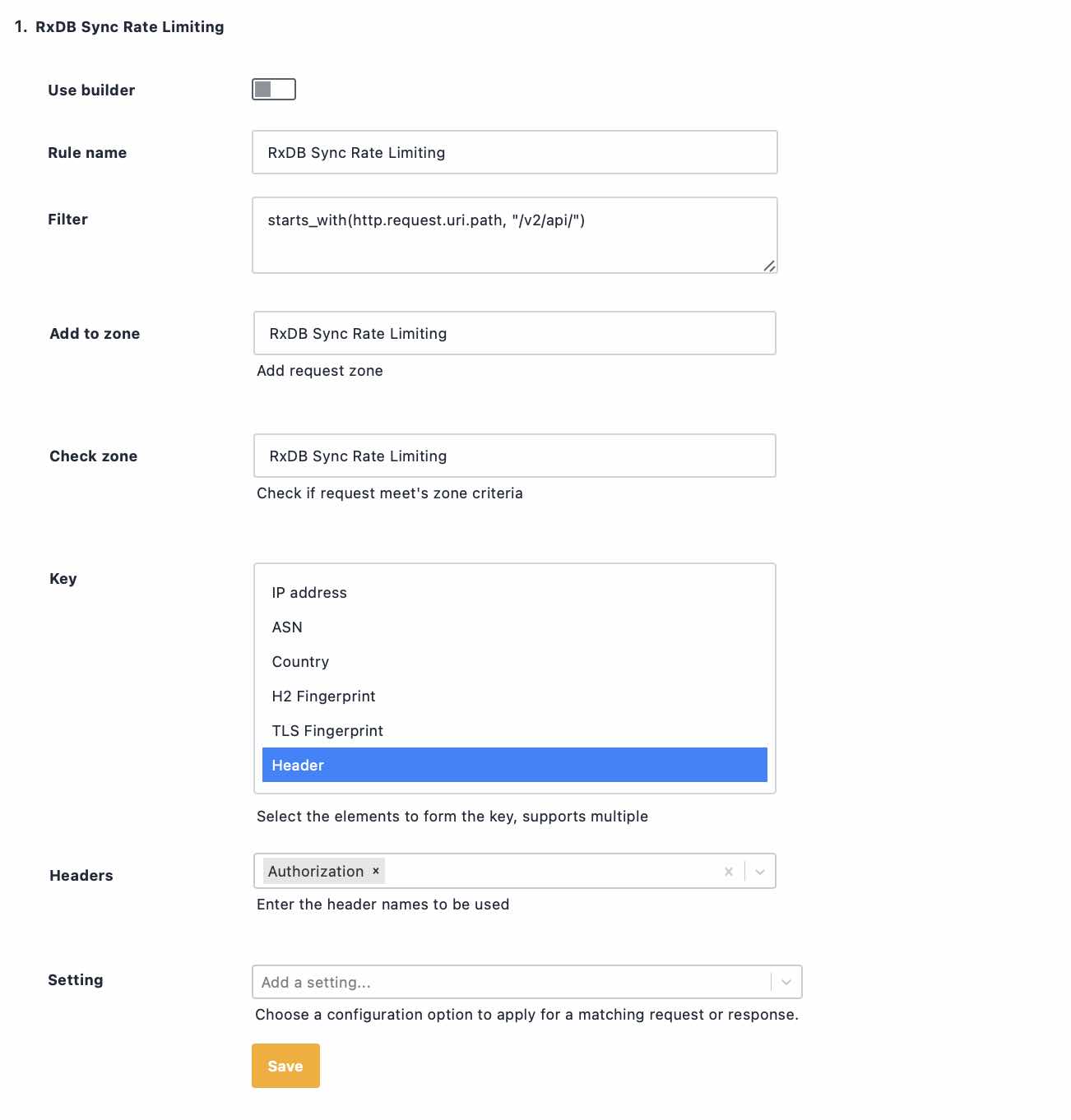

Rate Limit authenticated API Users

It is common for APIs to require an Authorization header as part of the request to authenticate access. By grouping requests on the value of this header, we can rate limit a specific API user even if their requests come from many different machines, or if their credentials are stolen and used elsewhere.
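A minimal Python sketch of grouping on the Authorization header might look like the following. The `auth_key` helper is hypothetical, and hashing the header value is a design choice made for this example so that raw credentials are not kept in the counter store.

```python
import hashlib
from collections import Counter
from typing import Optional


def auth_key(headers: dict) -> Optional[str]:
    """Return a counter key derived from the Authorization header, if present."""
    value = next(
        (v for k, v in headers.items() if k.lower() == "authorization"), None
    )
    if value is None:
        return None
    # Hash the credential so the counter never stores the secret itself.
    return hashlib.sha256(value.strip().encode("utf-8")).hexdigest()


counts = Counter()
requests = [
    ({"Authorization": "Bearer token-abc"}, "198.51.100.1"),
    ({"authorization": "Bearer token-abc"}, "198.51.100.2"),   # same token, new IP
    ({"Authorization": "Bearer token-xyz"}, "198.51.100.1"),   # new token, same IP
]
for headers, _ip in requests:
    key = auth_key(headers)
    if key is not None:
        counts[key] += 1

abc_count = counts[auth_key({"Authorization": "Bearer token-abc"})]
xyz_count = counts[auth_key({"Authorization": "Bearer token-xyz"})]
```

The two requests using `token-abc` land in the same counter even though they arrive from different IP addresses, while the request with `token-xyz` is counted separately despite sharing an IP address.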

Protecting from Account Takeovers

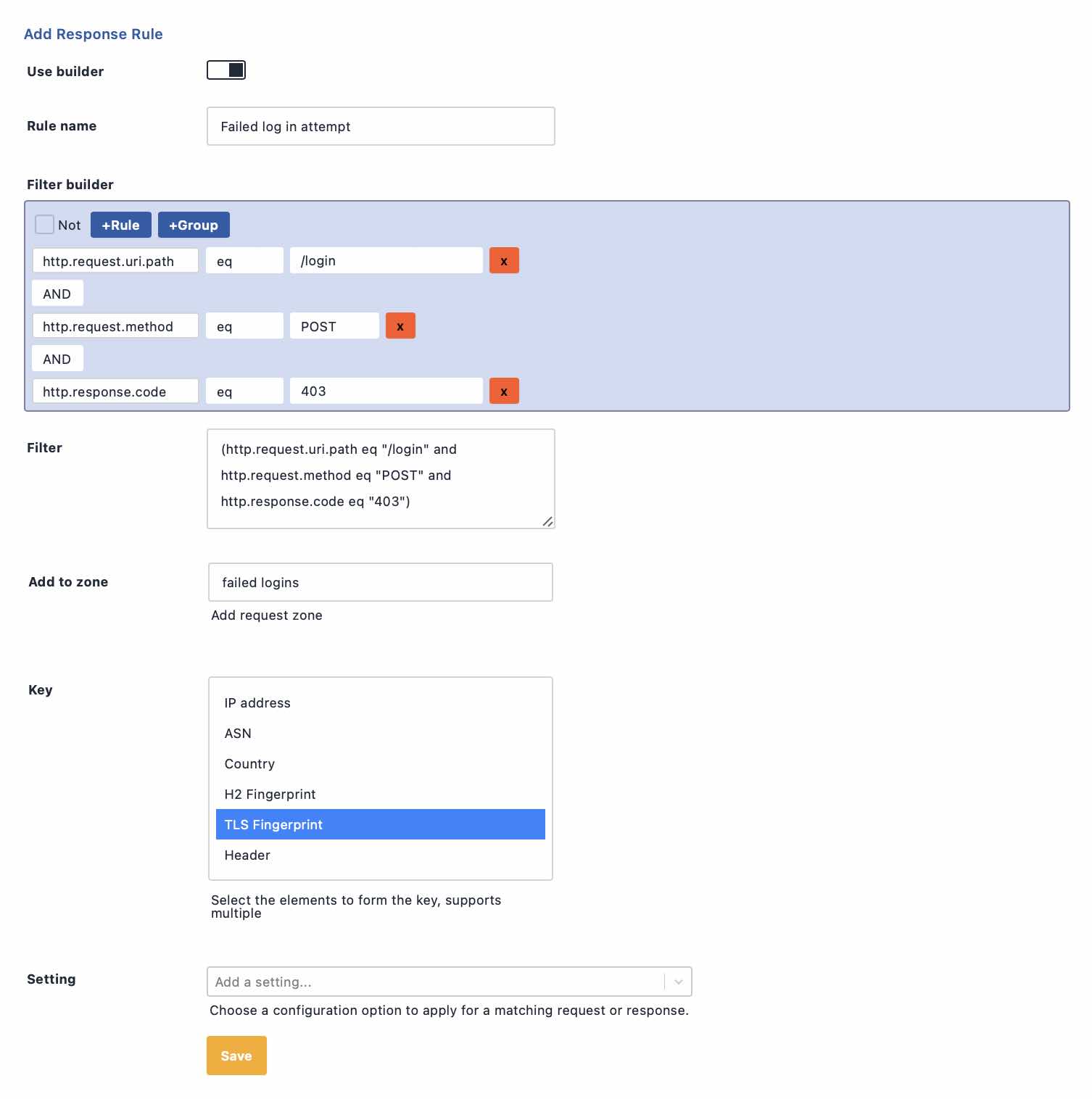

Account Takeover attacks have been in the news recently, with several high profile websites falling victim. Credential Stuffing and Brute Force attacks rely on attempting lots of logins to identify valid credentials, and lots of attempts means lots of failures. Attackers rely on software like OpenBullet to carry out their attacks, using proxy networks to constantly rotate IP addresses and defeat traditional rate limiting.

What can we do? Well, the program the attacker is using will present a consistent TLS fingerprint. We can make a special rule for our login form that tracks failed login attempts by TLS fingerprint, effectively tracking the attacker as they rotate IP addresses.

If the attack is low and slow, we can track failed attempts over a longer timeframe to catch them out, using the response from the server to decide when to add to our counting zone.
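The Python sketch below illustrates the idea of counting only failed logins, keyed by TLS fingerprint, over a long window. It rests on several assumptions: the `FailedLoginZone` class is hypothetical, a failed login is assumed to return a 401 or 403 (many applications signal failure differently), and the limits are example values.

```python
from collections import defaultdict, deque

FAILED_STATUSES = (401, 403)   # assumption: how this application reports a failed login


class FailedLoginZone:
    """Hypothetical zone that counts failed /login responses per TLS fingerprint."""

    def __init__(self, max_failures: int, window_s: float):
        self.max_failures = max_failures
        self.window_s = window_s
        self.failures = defaultdict(deque)   # fingerprint -> failure timestamps

    def record(self, fingerprint: str, path: str, status: int, now: float) -> bool:
        """Record a response; return True if the fingerprint should be blocked."""
        q = self.failures[fingerprint]
        while q and now - q[0] >= self.window_s:
            q.popleft()                      # forget failures outside the window
        if path == "/login" and status in FAILED_STATUSES:
            q.append(now)                    # only failed logins are counted
        return len(q) > self.max_failures


# A low and slow attack: one failed login every 10 minutes. The client IP is
# not part of the key, so rotating proxies does not reset the count.
long_zone = FailedLoginZone(max_failures=5, window_s=3600)
short_zone = FailedLoginZone(max_failures=5, window_s=60)
long_flags = [long_zone.record("fp-attacker", "/login", 401, now=i * 600.0)
              for i in range(6)]
short_flags = [short_zone.record("fp-attacker", "/login", 401, now=i * 600.0)
               for i in range(6)]
```

With a one hour window the sixth failure flags the attacker, whereas a one minute window never sees more than one failure at a time and misses the attack entirely.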

Conclusion

Advanced Rate Limiting is not just an evolution, it's a revolution in rate limiting. IP address rotation is standard practice among attackers and scrapers, rendering the traditional approach obsolete. The ability to count requests against a combination of network fingerprints is a requirement for stopping abuse from scrapers, SEO spiders, and layer 7 attackers.